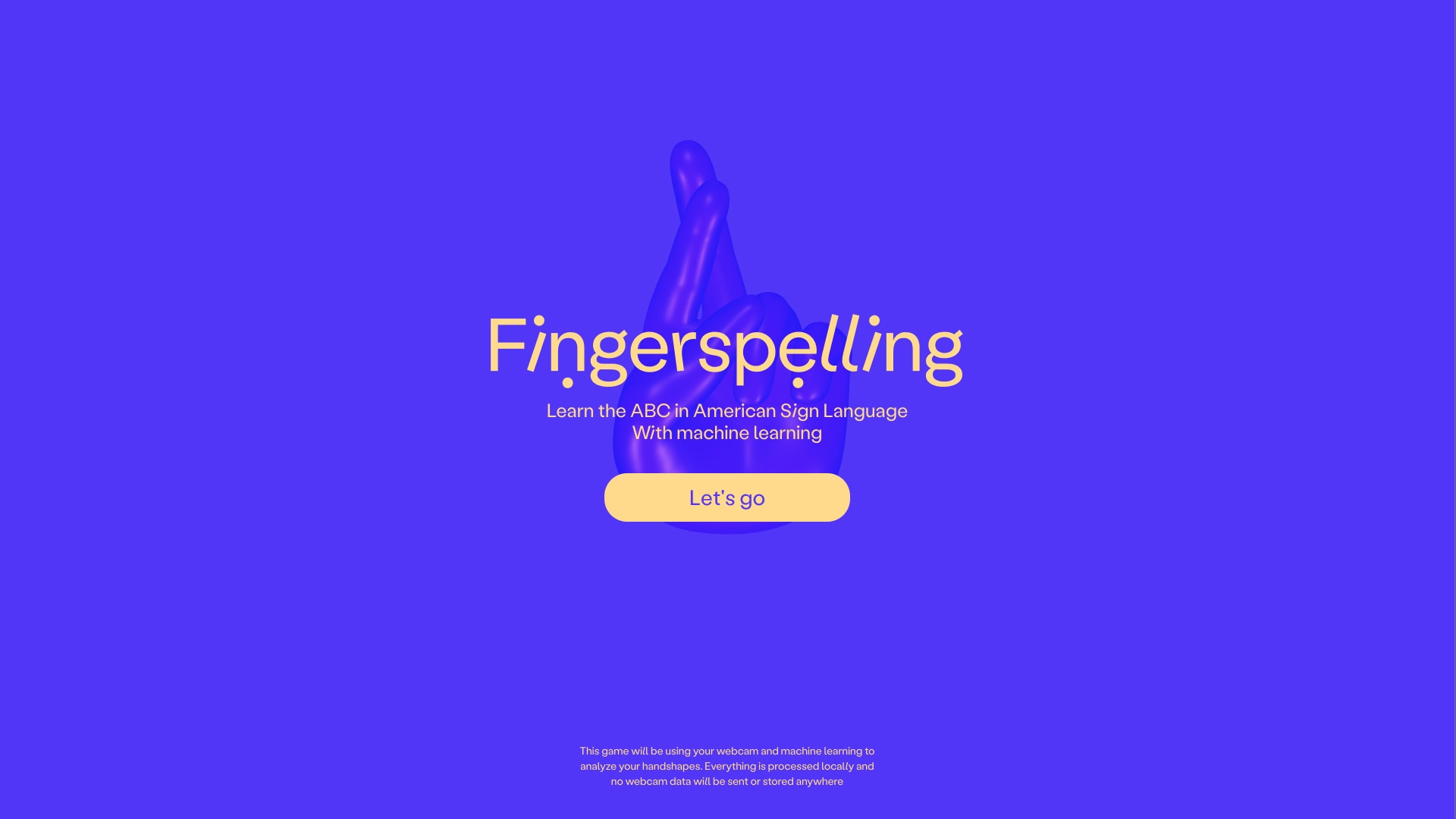

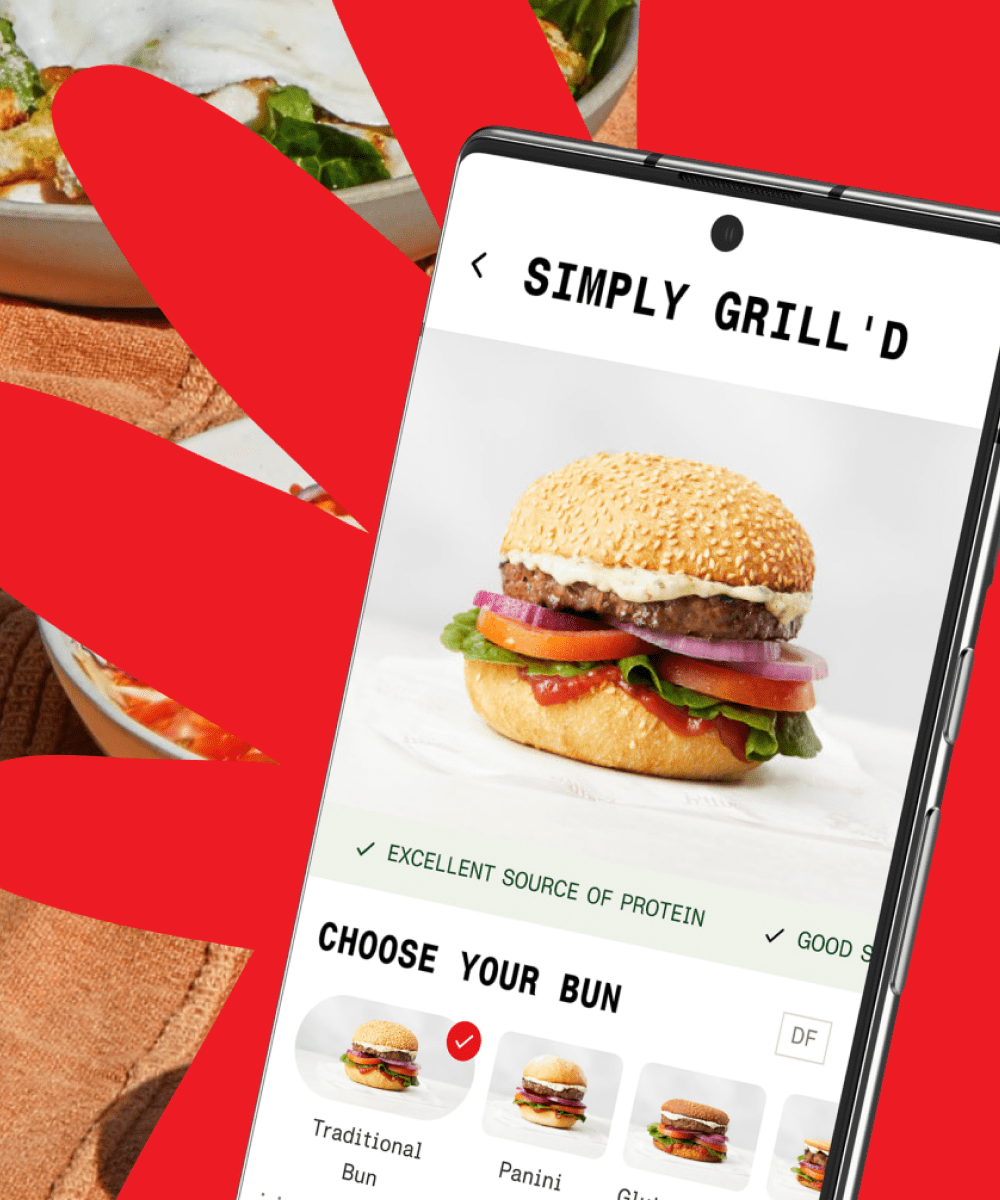

Culture

DEPT® solves the problems of tomorrow, today.

As a full-service digital agency, we keep brands ahead through insight, collaboration, fast problem-solving, a local presence, and, when needed, our coveted global teams. We guide our clients to anticipate what others can’t.

How we do it